Good things of the 21st century

RPG historian Shannon Appelcline has produced a hardback tome on Traveller. How can you not love this?

I have only just started it, but it is good so far.

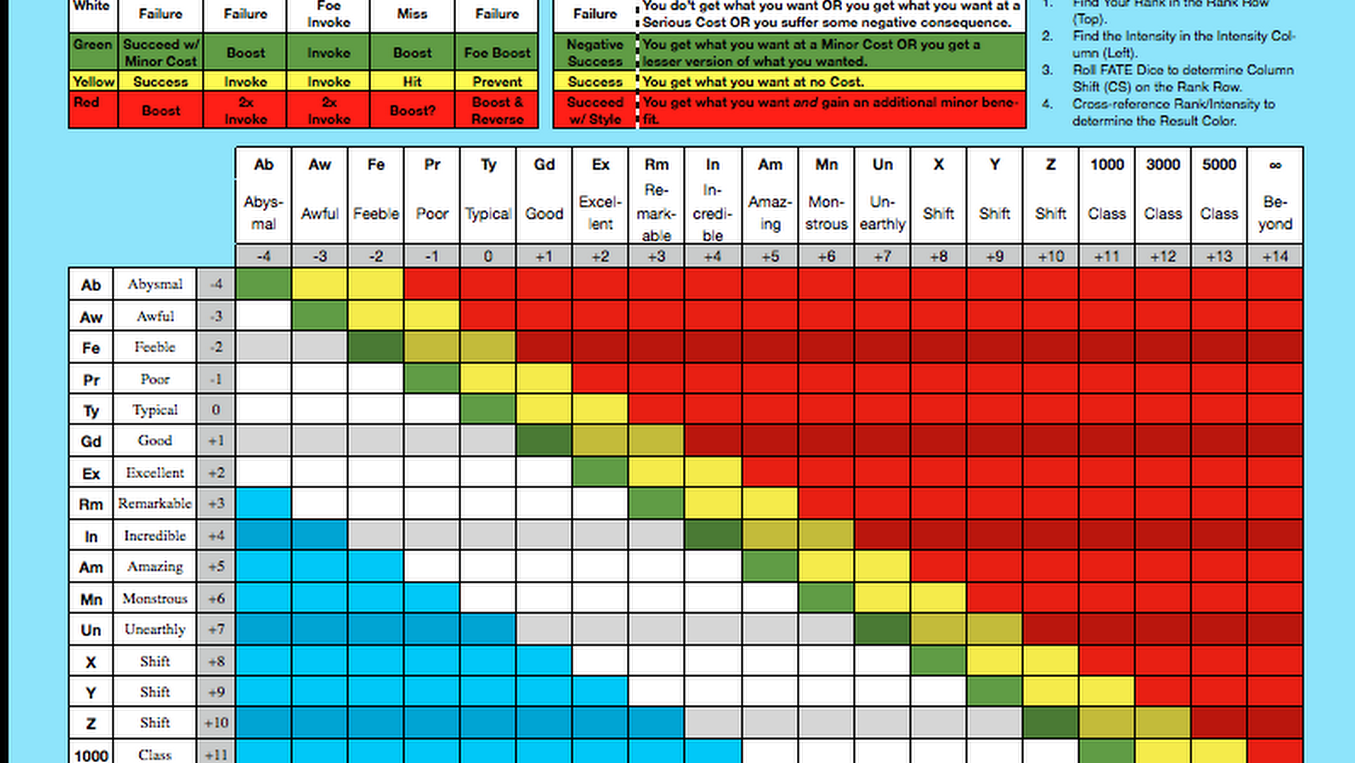

Remarkable, Incredible and Amazing nerdiness

What might be like what, according to this less-than-ideal game PDF hackery? Here is an example:

These two examples are not too bad.

I made a GloVe embedding model based on my game book collection: 7000-odd books, of which 6000 or so made it through a first-pass PDF extraction pipeline.
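The "what might be like what" lookup is essentially a nearest-neighbour query over word vectors. Here is a minimal sketch of that idea, assuming cosine similarity as the measure. The toy three-dimensional vectors and the names `cosine` and `most_similar` are made up for illustration and are not taken from the notebook.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def most_similar(word, vectors, topn=3):
    """Rank every other word by cosine similarity to `word`."""
    target = vectors[word]
    scored = [
        (other, cosine(target, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:topn]

# Hypothetical toy vectors; a real model has far more dimensions.
toy_vectors = {
    "starship": [0.9, 0.1, 0.0],
    "scout": [0.8, 0.2, 0.1],
    "dragon": [0.1, 0.9, 0.2],
    "wyvern": [0.2, 0.8, 0.3],
}

print(most_similar("starship", toy_vectors, topn=2))
```

With these toy numbers "scout" ranks closest to "starship", which is the sort of result you would hope a model trained on game books gives.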

This framework is quite good and parallelises, which is important for big books:

https://github.com/NRCan/geoscience_language_models/tree/main/project_tools

The C version of GloVe is superior:

https://github.com/stanfordnlp/GloVe
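When the C tool is set to write plain-text output, each line of the vectors file is a word followed by space-separated floats. A rough sketch of reading that format back into Python follows. The function name `load_glove_text` and the sample values are hypothetical, and an in-memory string stands in for the real file.

```python
import io

def load_glove_text(handle):
    """Parse 'word v1 v2 ...' lines into a dict of word -> list of floats."""
    vectors = {}
    for line in handle:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors

# Made-up sample standing in for the real vectors file.
sample = io.StringIO("traveller 0.12 -0.40 0.33\ndungeon -0.05 0.27 0.91\n")
vectors = load_glove_text(sample)
print(len(vectors), len(vectors["traveller"]))
```

For a real run you would pass an opened file instead of the `StringIO` object, for example `open(path, encoding="utf-8")` with whatever path your training run wrote to.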

With some work (and associated hacks) you can get a Python version going, but I wouldn't recommend it for a large collection.

e.g. https://pypi.org/project/glove-py

The notebook associated with this is here: https://github.com/bluetyson/RPG-gloVe-Model

These days Microsoft probably won't let you view something that big online, so I will post a series of excerpts.

Here's a Google spreadsheet with a list:

https://docs.google.com/spreadsheets/d/1fWzs6hRpx8ZTOpLWAUy7B0wRwopkzWC0yvr8i-7r1pQ/edit#gid=0

https://shutteredroom.blogspot.com/2023/12/slaine-and-ukko-for-basic-games.html

On creating these sorts of inspiration-for-genre heroes in D&D games.

In this case, the pair from 2000AD.

Campaign wiki style.

https://alexschroeder.ch/view/2023-08-13_Random_generators_on_wiki_pages

https://github.com/modality/abulafia

The old random generators, with instructions on how to get them running again.

There is a classic move in this game recap:

https://barkingalien.blogspot.com/2023/12/kineto-returns-inthe-return-of-return.html

Cryptid megalodons make good game monsters. Bonus points for being black and attacking at night.

This megalodon would be good to turn into a fantasy adventure version.